By ZDNet

The challenges of making a handheld device receive several simultaneous signals from sub-millimeter wave frequencies remain extraordinary. They’re as daunting, and perhaps as seemingly insurmountable, as the ones NASA engineers faced in the mid-1960s after the Apollo 1 fire, as they raced to catch up with President John F. Kennedy’s end-of-the-decade deadline for a safe moon landing.

Anyone looking to get a first-person glimpse of engineers working to overcome these new obstacles might have taken the opportunity last March to visit the campus of the University of Bristol’s Smart Internet Lab.

Wonder world

It was the modern-day equivalent of the famous 1939 New York City World’s Fair demonstration of the wonders of network television. Here, in partnership with Nokia and BT, the Lab gave a public square demonstration of what it would be like for an individual to receive data at the speeds promised by 5G wireless.

Strolling around the grounds, you’d see demo participants wearing virtual reality headsets looking as though they were trying out for yet another “Walking Dead” sequel. In fact, they were experiencing not just the speed but the responsiveness of high-definition video sent by wireless servers connected to local transmitter hubs via 5G frequencies. You’d also have seen the results of a sophisticated 3D simulation depicting the entire city of Bristol, recreating the transmissivity and connectivity parameters for any two points in the city’s space, and working to resolve the issues of eliminating dead zones.

It was an impressive array of experiments, especially if you’re like me and you like peering under the hood to get a peek at how people and systems work together.

But if you’d peeked behind one of the tent canvases, you would have noticed something peculiar: The demo team’s 32 x 4 multiple-input / multiple-output (MIMO) demonstration antenna, devised to receive signals relayed from Nokia base stations, was about the size of the backboard for a park bench.

An “end-to-end” test of 5G, as the Lab called it, depends on what you consider an “end.” The customer end was lacking a real-world 5G device, mainly because the problems of miniaturizing the antennas for such a device (what wireless engineers call the user equipment, or “UE”) are only now being tackled.

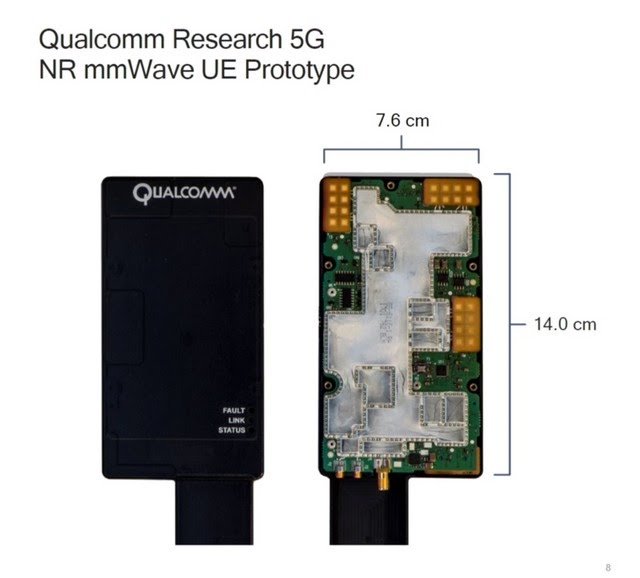

The long road to a working 5G device became much shorter in mid-July, with Qualcomm’s announcement that it has begun the sampling phase for its QTM052 millimeter-wave (mmWave) antenna modules (above). These are microscopic receivers embedded on chips, designed to be installed in smartphones and tablets, capable of sending and receiving signals at frequencies of 26 GHz and higher.

It’s the first 5G UE component whose inclusion could significantly alter our concept of what a “smart device” does. It could also, Qualcomm has hinted, have some impact on what it looks like.

Polar opposites

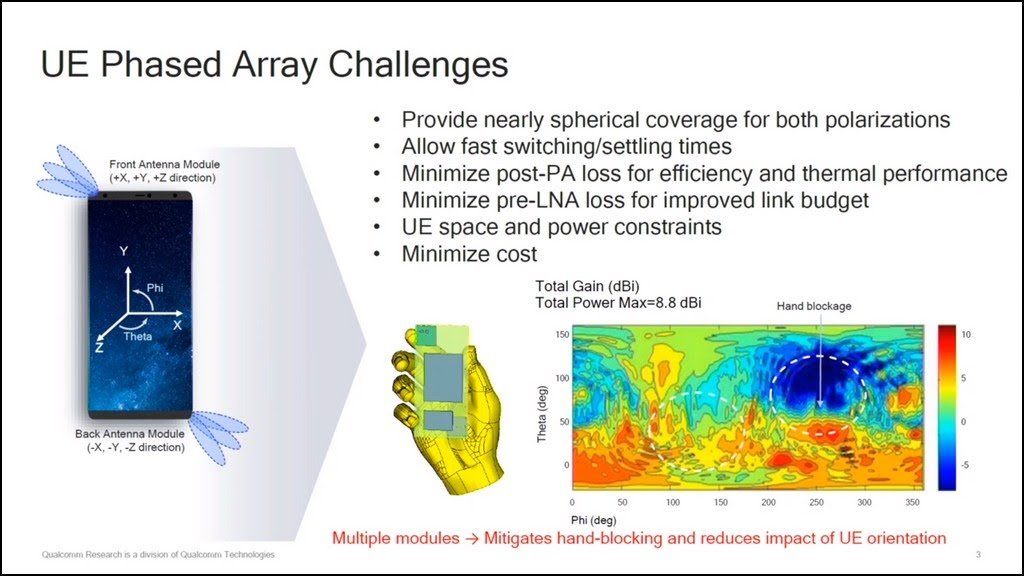

“We need spherical coverage for both polarizations,” said Ozge Koymen, Qualcomm’s senior director for 5G technology, speaking last April at a session of the Brooklyn 5G Summit. “What that means, for a particular UE, is you want to ensure that you have enough active antenna modules around the phone, so that for V and H, you have spherical coverage.”

“V” and “H,” as he referred to them, are the two polarizations (vertical and horizontal) that a mmWave signal may have. If you’ve ever played with truly polarized sunglass lenses, you’ve seen that if you turn one lens 90 degrees and place it in front of the other, you can block out all light. Millimeter waves behave similarly: a phone getting a clear mmWave signal, twisted 90 degrees in either direction, suddenly gets no signal at all.
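For idealized, linearly polarized antennas, this effect follows the same cosine-squared rule (Malus’s law) that governs polarized lenses. The sketch below covers only that idealization. It is not a model of any Qualcomm module, the function name is made up for illustration, and real handsets see reflections and imperfect polarization that soften the drop-off.

```python
import math

def received_power_fraction(misalignment_deg: float) -> float:
    """Fraction of signal power an ideal linearly polarized antenna picks up
    when it is rotated misalignment_deg away from the incoming wave's
    polarization (idealized assumption: no reflections, no leakage)."""
    return math.cos(math.radians(misalignment_deg)) ** 2

# Aligned, halfway, and fully crossed orientations.
for angle in (0, 45, 90):
    print(f"{angle:>2} degrees: {received_power_fraction(angle):.3f}")
```

Under these assumptions, a fully aligned antenna receives all of the power, one at 45 degrees about half, and one at 90 degrees essentially none. That is why covering both V and H matters.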

Qualcomm’s solution is to advise manufacturers to install at least two antenna modules in each device, preferably on opposite corners from one another. (The company’s own test device, pictured left, clearly includes three.) If the device is large enough, it’s unlikely that your hand would block both corners at the same time, regardless of which hand you use.

Hand blockage, and in fact any blockage, is the biggest obstacle for mmWave. For any one mmWave signal, the transmission is not spherical or omnidirectional. It spreads from the transmitter in a wedge no wider than about 25 degrees.

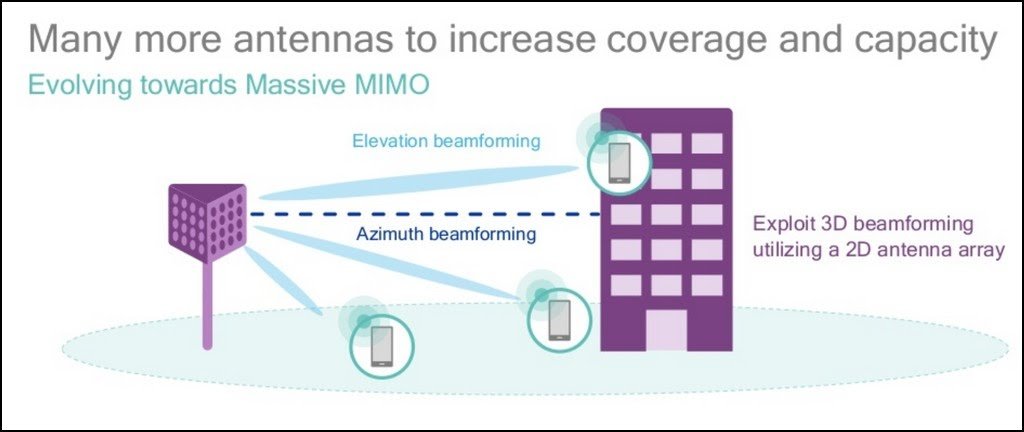

So, optimally, the transmitter should have a clear line of sight between itself and the receiving device. Since such a case will seldom happen, and only briefly, mmWave relies on a technique called beamforming to use intermediate hubs, mirrors, and even everyday metal objects to perform “bank shots,” if you will, steering the signal around obstacles to eventually reach its receiver. In a perfect world, the signal will be sent from multiple transmitters simultaneously, each one filling in 25 degrees or so of the 360-degree coverage area, for a higher probability that at least one signal reaches the UE.
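The arithmetic behind that coverage picture is simple enough to sketch. The snippet below assumes non-overlapping beams in a single horizontal plane and ignores elevation, both simplifications made here for illustration. The function name is hypothetical.

```python
import math

def beams_to_cover(beam_width_deg: float, coverage_deg: float = 360.0) -> int:
    """Minimum number of non-overlapping beams of the given width needed
    to span coverage_deg in one plane (simplifying assumption)."""
    if beam_width_deg <= 0:
        raise ValueError("beam width must be positive")
    return math.ceil(coverage_deg / beam_width_deg)

print(beams_to_cover(25))  # 360 / 25 = 14.4, so 15 beams
```

By this rough count, it would take at least 15 wedges of 25 degrees each to ring a single point with signal.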

Motion sickness

But what if this mobile device, as its own name implies, moves? Unlike any other cellular technology to date, mmWave will need to rely upon dynamic, flexible strategies for maintaining customer connections. Unlike video streaming, where unseen frames can be buffered in advance for when signal quality diminishes, the types of applications that would be made feasible by mmWave — for example, remote workstation computing — would rely upon persistent connectivity.

In fact, that’s one of the missions of the Bristol University lab: It uses laser-measured 3D maps of buildings and foliage, such as the above example, as input data for experimenting with how mmWave connectivity would fluctuate as users move from point to point within their coverage areas. It’s a live, and perhaps too vivid, demonstration of the wild and circuitous routing and re-routing tasks needed to facilitate every mmWave customer.

“Just the fact that we are beamformed, on the base station side as well as the UE handset side, doesn’t preclude us from being mobile,” explained Qualcomm’s Koymen in an interview with ZDNet. “It just means that beam forms are pointing to each other, but we periodically, in the background, search for new beams so that, as the UE moves or the environment changes, we can switch beams and we can track the user.”
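One way to picture the background search Koymen describes is as a loop that re-measures candidate beams and switches only when another beam is clearly stronger. The sketch below is an illustration under stated assumptions, not Qualcomm’s algorithm: the beam names, the signal readings, and the 3 dB switching margin are all invented for the example.

```python
from typing import Dict

def choose_beam(current: str,
                measurements_dbm: Dict[str, float],
                margin_db: float = 3.0) -> str:
    """Return the beam to use after one background sweep.

    Switches only if the strongest candidate beats the current beam by
    margin_db (an assumed value), to avoid flapping between similar beams.
    """
    if not measurements_dbm:
        return current  # nothing measured, keep what we have
    best = max(measurements_dbm, key=measurements_dbm.get)
    current_power = measurements_dbm.get(current, float("-inf"))
    if best != current and measurements_dbm[best] >= current_power + margin_db:
        return best
    return current

# Hypothetical readings (in dBm) as a user walks behind an obstacle.
sweeps = [
    {"beam_a": -70.0, "beam_b": -85.0},
    {"beam_a": -78.0, "beam_b": -77.0},
    {"beam_a": -90.0, "beam_b": -72.0},
]

beam = "beam_a"
for sweep in sweeps:
    beam = choose_beam(beam, sweep)
    print(beam)
```

With these made-up readings, the device stays on beam_a for the first two sweeps and moves to beam_b on the third, once the original beam has faded well below the alternative.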

Here is the primary thing that a 5G handset does differently from a 4G handset: It needs to give clues back to the base station as to how it’s moving in space, for what engineers call beam tracking. At lower frequencies, such as the “sub-6” band (below 6 GHz), it’s possible for devices to broadcast their position changes like submarine pings, and the base station will make adjustments and handoffs as necessary, not unlike previous cellular methodologies.

But at the highest end of the 5G spectrum, the base station and the UE exchange positioning signals back-and-forth in a process that looks a lot more like ping-pong. It’s not just a physically challenging process for telcos to implement, but also an expensive one.

This is why 5G devices with mmWave capability (which may not be all 5G devices) will need sustaining applications over and above simply buffering movie streams. Such devices are designed to handle special bandwidths said to approach 1.6 Gbps.

The cloud in your pocket

“Our long-term vision is something that we’re calling split processing,” explained Sherif Hanna, Qualcomm’s director of product marketing for 5G, speaking with ZDNet. “You have a cloud-enabled app running partially on your local device, that then taps into additional computing power — whether it’s CPU or GPU capability — from a cloud server hosted close to the user. It basically calls upon additional processing power on-demand.”

Hanna helps paint a picture of a 5G mmWave device as the hub of a hybrid workstation. It could be as compact as a smartphone or a small tablet, yet it could more easily connect with wireless displays and keyboard, mouse, and touchscreen inputs.

Put another way, a 5G device could become a kind of hybrid cloud portal, with hybridization on both the server and client sides. You’re already familiar with virtual desktops, and how enterprise data centers deploy and manage them. And you’ve seen that Samsung already produces a docking station for the Galaxy S8 that enables its Qualcomm Snapdragon 835 processor to serve as the hub for a virtual PC.

Now, picture a future Qualcomm-powered device that blurs these lines a bit: It would run most of its applications locally, but when mmWave bandwidth is present, some high-performance applications — for instance, non-linear video editing, or arthroscopic surgery — would borrow compute and graphics power from the cloud data center. With latencies of 2 ms or lower, it would be like leveraging a full-featured graphics workstation as a co-processor.

As Hanna points out, this would open up smartphones to categories of applications that have not been physically feasible up to this point. You see, the Snapdragon 835 is a real CPU. But for it to act like a PC’s CPU, it would need an active cooling system — impossible for a handheld device.

If everything works out, at least within those metropolitan areas where mmWave service would be deployed, the handset may consume those remaining functions from the PCs of the 1990s and 2000s it has not already devoured. In fact, it may need to, in order for telcos to afford implementing the very techniques that will make mmWave functional in the first place.
